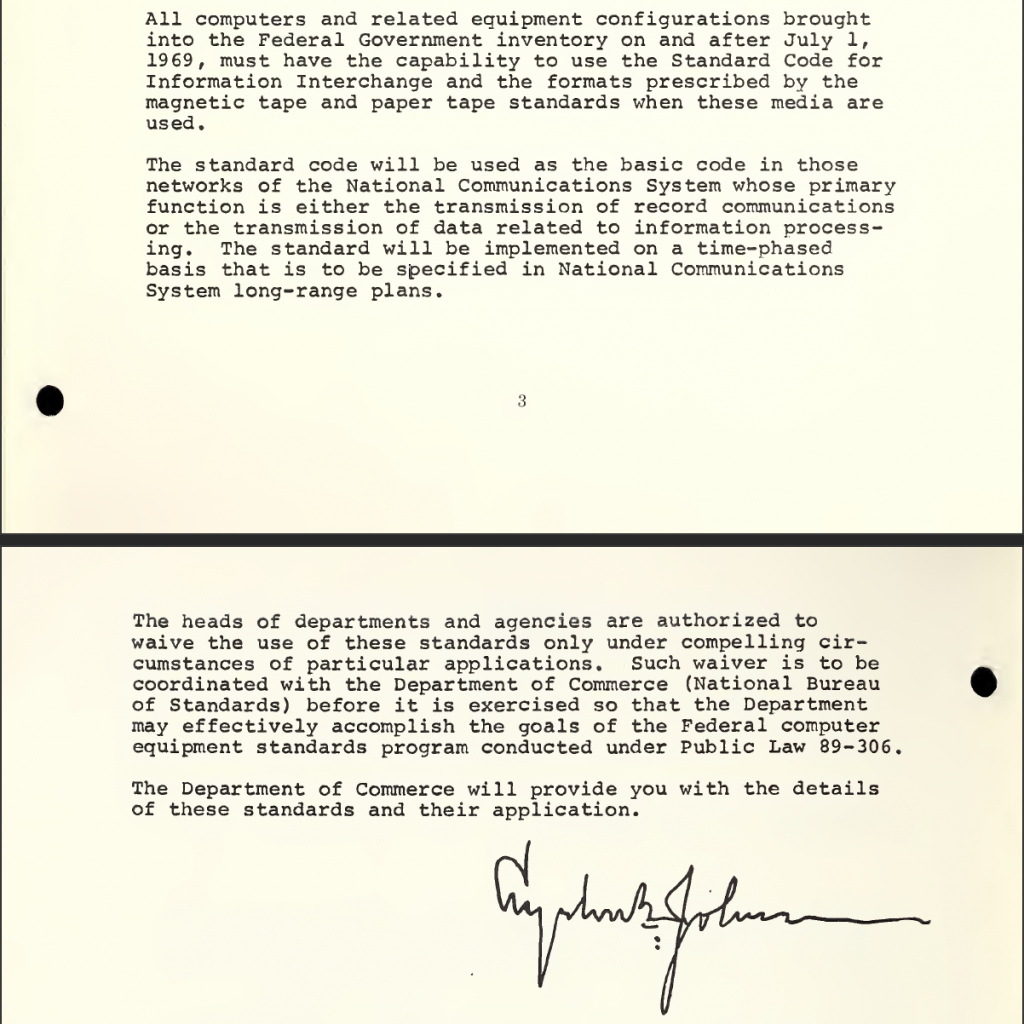

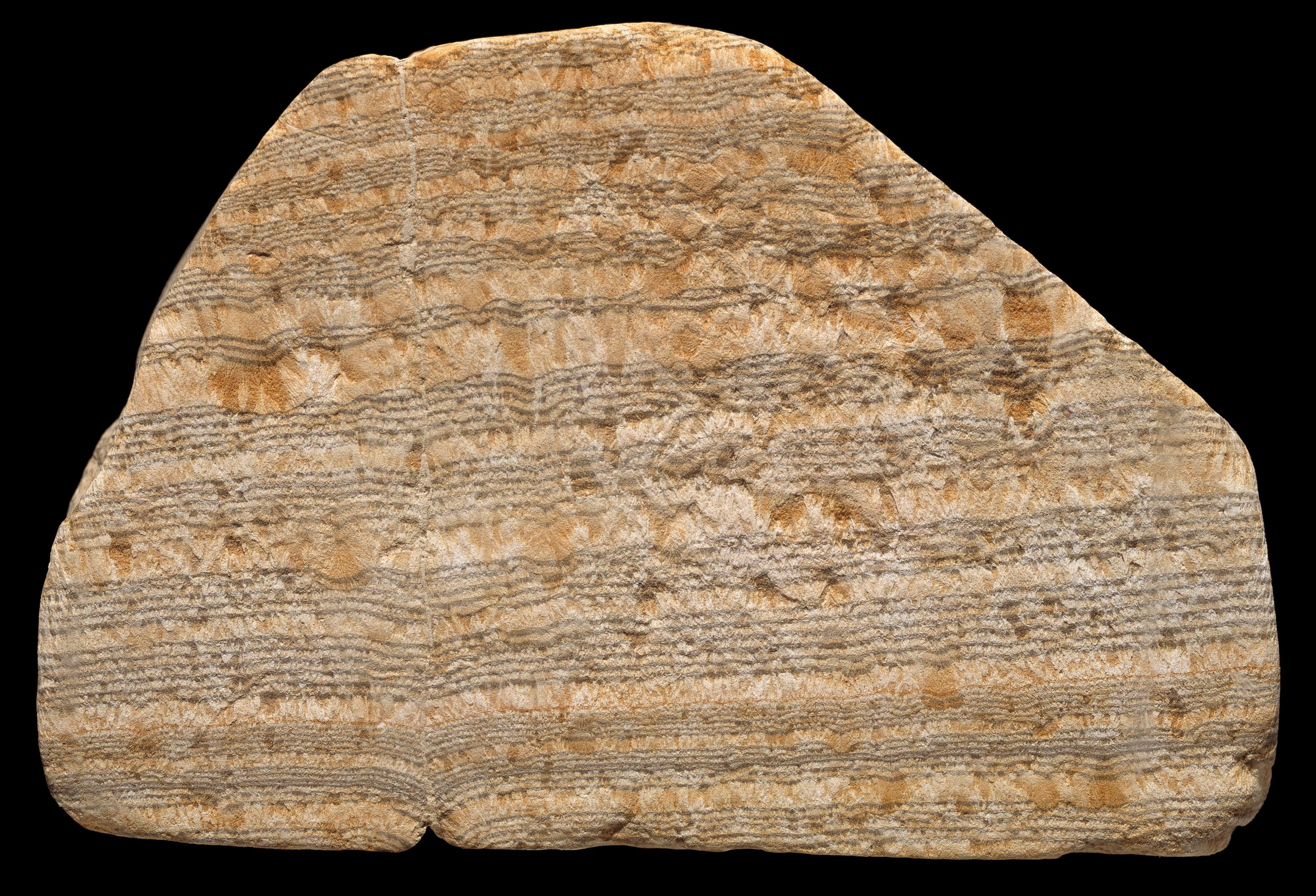

Sometime in August 1769, miners at Mearn’s Pit in High Littleton, Somerset discovered that a rectangular drainage pipe they had constructed from elm wood was blocked. As in many mines, removal of water was a problem; so was ventilation. Around the end of 1766 they had sunk a new shaft to improve the airflow, but this brought more water coursing down the walls. They tackled this problem by installing lightly inclined wooden gulleys on the mine’s four sides to direct the flow to the 7.5 x 4 inch pipe. In turn, the pipe would carry the water off to a passage out of the mine about 42 feet below, probably pumped out by a Newcomen Engine – the early steam engine typically used at the time for this purpose. Less than three years later, their drainage system had begun to fail, prompting them to excavate and examine the pipe. This is a drawing of a cross-section of the pipe as they found it:

It had become so scaled up with minerals that the water could not drain fast enough. The deposit had a certain peculiarity: it was striped with alternating dark and light bands quite evenly throughout, but for a point where a nail had penetrated the pipe’s side.

A sample of the deposit from Mearn’s Pit came into the hands of the Reverend Alexander Catcott of nearby Bristol, a theologian with an interest in fossils and geological strata who had just published the expanded second edition of his Treatise on the Deluge (1768). Fossil traces of apparently aquatic forms far from any ocean had of course, since antiquity, fuelled the idea in many cultures of a great primeval flood. Now, as ever-deeper mining operations driven by capitalist industry brought heightened awareness of the Earth’s strata and the fossils with which they were associated, the gentleman scientists of the era were increasingly puzzling over implications for the Genesis narrative in the pages of such publications as the Royal Society’s Philosophical Transactions.

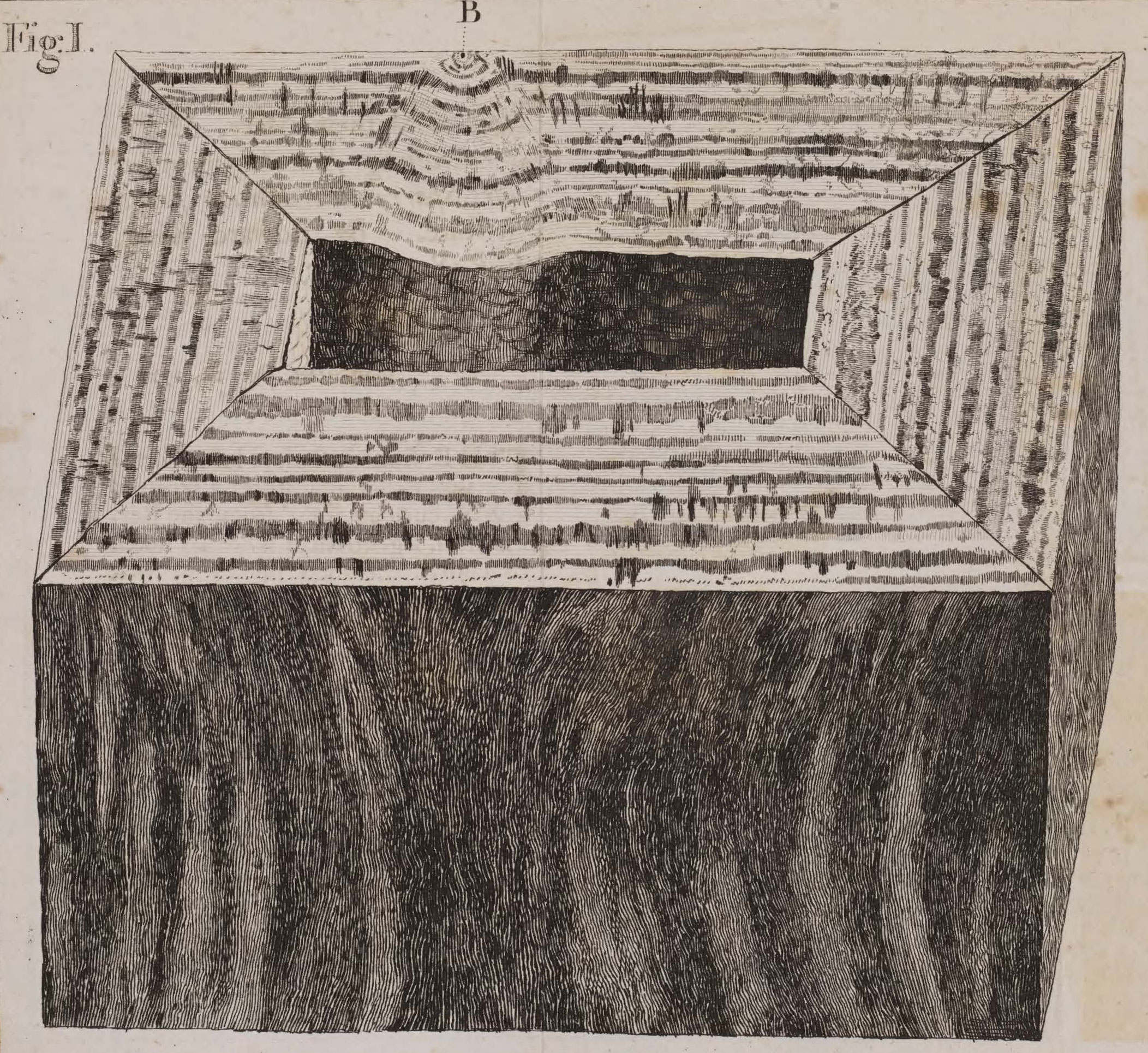

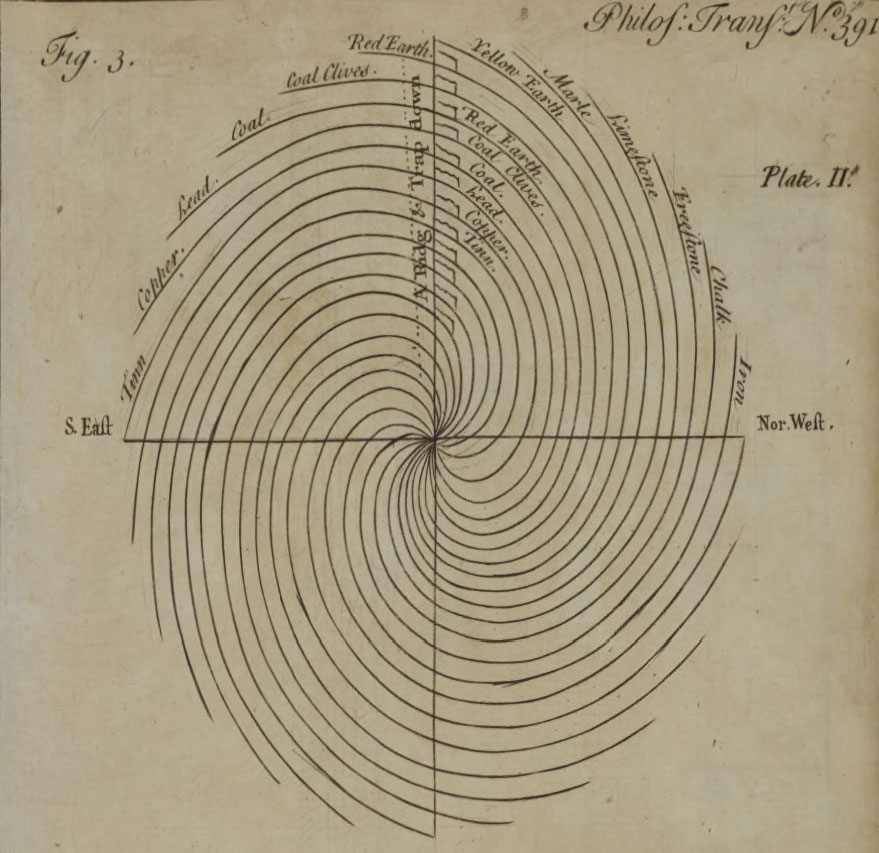

Indeed, already in 1719 local landowner John Strachey, who had developed an interest in extracting the coal under his estates, had written to the Society with his observations about the regularity of strata in the area around High Littleton. Strachey diagrammed the regular, sloping bands.

Of particular interest to those prospecting for coal was that the regularity of these layers, always sloping towards the South East, made the location of veins predictable. While most mining since antiquity had occurred near the surface of the Earth, with knowledge like this, deeper mines could be sunk with greater certainty of a return. A few years later, in 1724, Strachey followed up with another Philosophical Transactions article, further diagramming the Somerset strata and proposing a bold theory of their formation: the planet had been constituted with layers radiating from its centre at Creation, but its spin had caused them all to twist, furled one upon the other, ‘like the winding up of a Jack, or rolling up the Leaves of a Paper-Book’. There were 24 layers – one for each hour of the day – which had ticked by in a daily cycle ever since.

Strachey had granted a mining concession in 1719 to a neighbouring landowner, William Jones, who married his sister Elizabeth. Their daughter, Mary Jones, inherited the estate and coal property at High Littleton, and was one of eight local landowners involved with Mearn’s Pit a half century later when the pipe blocked and the strange, striped rock fell into Catcott’s hands.

From Catcott, the stone was passed to Edward King, an antiquarian and also a Fellow of the Royal Society, who wrote up his finding and diagrammed it in the image with which we started this article. Like Catcott and Strachey, King was much concerned with the implications of geological phenomena like regular strata and fossils for the Genesis narrative, and had offered his own speculations to the Society a few years earlier in ‘An attempt to account for the universal deluge’ (1767). In the terms of the proto-geological debates of the day, King was a Plutonist or volcanist: without quite contradicting the idea of a primeval flood, he proposed volcanic activity as the transporter of aquatic fossils from ancient sea beds to mountaintops. The opposing theory was that of the Neptunists, who held the continents to have precipitated out of ancient waters – but, King asked, where did all that water go? Similar debates can be traced all the way back to figures of the Islamic Golden Age such as Avicenna, and to some extent to Strabo, a Greek thinker writing at the time of the shift from Roman Republic to Empire. King was also a catastrophist, in that he took this volcanic movement of seabeds to have occurred in a single gigantic event which might also account for the deluge – with such an upheaval, there would, after all, have been a lot of water sloshing around. Within decades the Plutonists would win out as the modern scientific discipline of geology cohered, but catastrophism would give way to the gradualism of King’s more famous contemporary James Hutton and his heirs, such as Charles Lyell.

In 1791, Mary Jones died. According to her will, the estate and colliery were to go to a cousin, and in the execution of this a land surveyor was required, for which they hired a young man named William Smith; by the next year he was surveying Mearn’s Pit. Smith seems to have gained access to Strachey’s papers on the geological strata of the area – likely through the close family connection of his employer – and to have drawn on them in his own enquiries. It was in this work at Mearn’s Pit that he first developed the geological understanding which would eventually enable him to create his famous stratigraphic maps of Britain; the 1815 version was the first of any whole country.

Key to this was the orderly, predictable sequence of layers and associated fossils, a layering which, in his work and that of his scientific heir and collaborator John Phillips, would provide the basis for geological periodisations – Paleozoic, Mesozoic – still in use to the present. Thus Mearn’s Pit may be seen as a central location in the formation of a new notion of time, caked in the composition of the planetary crust; a time that is deep, structured in superposed layers, descending from the present surface down into the ancient past; a geological time that would come to be recognised as far greater than the biblical scales people were accustomed to, and which would provide an important basis for Darwin’s work a few decades later.

As to the peculiar rock that was clogging the pipework, King, for his part, does not seem to have put much thought into explaining its stripes. He attributed them to ‘the water bringing, at different times, more or less oker along with the sparry matter’. But his specimen seems to have been an example of what miners themselves called ‘Sunday stone’; indeed his is the earliest record of that stone I have been able to find. This stone often formed in the drainage pipes of mines as pale-coloured lime was deposited by the water flowing through them. This lime would be tainted regularly by the coal dust that filled the air when miners were working. The resulting rock – a pure by-product of the labour process – could thus be a fairly good record of working hours in a particular mine, with dark stripes for working hours, separated by thin pale bands. Since miners typically worked six-day weeks, there was a pattern of six fairly even pairs of stripes, followed by a thicker pale band for Sundays – hence the name. Holidays too were marked in this mineral timesheet. Here was another temporality inscribed in the layered rocks of Mearn’s Pit, on a scale far different to the geological one that would emerge from the work of stratigraphers like Smith. This was the time of the capitalist labour process, caked in rock.

A younger contemporary of Smith’s, Robert Bakewell, would publish his Introduction to Geology in 1815, the same year as Smith’s map. Bakewell was familiar with Sunday stone and noted a certain analogy in a discussion of ‘the formation of the superficial part of the globe’. If the Earth’s strata could have been formed through ‘successive igneous and aqueous eruptions forced through craters and fissures of the surface’, which would each have deposited a new layer of rock, this layering could be seen in microcosm in a certain artefact:

To compare great things with small, there is an analogous formation taking place every day in the channels which receive the boiling waters from some of the steam-engines in the county of Durham. This water contains a large quantity of earthy matter which is deposited every day, except Sunday, in regular layers that may be distinctly counted, with a marked line for the interval of repose on Sunday, between each week’s formation: hence the stone got out of these channels has received from the country people the name of Sunday stone.

Thus Sunday stone appeared as an example of geological time in microcosm, the strata deposited during regular cycles of work and rest analogous to those deposited during phases of volcanic activity and inactivity. If the regular, measured time of the working week gave Sunday stone its peculiar form, materially encoding its periods in stripes legible to miners, then geological strata might be read in the same way, their sequence recording the time of the Earth itself. The mine was at that point the primary means for the development of geological knowledge – and, it turned out, even its labour process could supply a model of deep, structured time.

By the 1870s, according to Francis Buckland’s Curiosities of Natural History, King’s specimen had made its way to the British Museum’s North Gallery No. III. Marx was still working in the reading rooms, so it seems reasonable to wonder if he encountered the stone, and what he might have made of it. Marx read the work of the geologists that emerged in part from Mearn’s Pit: he engaged fairly substantially with Phillips – and Smith gets the odd mention – in the notebooks of March–September 1878; he was well aware of the stratigraphic science of the moment. But King was a minor, eccentric figure from a previous age. Bakewell was more significant, but by the time Marx looked seriously at geology his Introduction was out of date; he studied the 1872 edition of Joseph Beete Jukes’s Student’s Manual of Geology instead.

Sunday stone was known somewhat in British culture by the mid-nineteenth century, apparently more from miners themselves than the more famous geologists, to whom it would perhaps have remained a mere curiosity. It was mentioned with characteristic moralism in Christian children’s literature, as God’s record of labour time in admonitions to observe the Sabbath – as if a religious injunction were required for miners to desire a day out of the pits. But this stone seems, on the contrary, an exemplary materialist object, for here we find capitalist social forms already – in the belly of the Industrial Revolution – meshing with geology, leaving traces in the crust, the most literal announcement of the Capitalocene; labour time fossilised like the rings of an ancient tree, at a key site in the discovery of geological temporality; a time written in the black of fossil fuel; a time that congealed until the production process itself broke down.

Read on: Adam Hanieh, ‘Petrochemical Empire’, NLR 130.